Musk's OpenAI training robots to think like humans in virtual reality (VIDEOS)

Not content with revolutionizing the online payment, energy production and storage markets and the private space industry, Elon Musk has now turned to training machines to learn as humans do, only in virtual reality.

OpenAI, which Musk co-founded in 2015, is a non-profit organization backed by $1 billion in pledged funding. Its aim is to create ‘friendly’ Artificial General Intelligence (AGI).

Conventional Artificial Intelligence is built for a specific task and makes decisions based on specific inputs. This is why regular AI is considered ‘narrow’ or ‘weak’ within the industry: it is highly limited in what it can achieve.

Artificial General Intelligence, on the other hand, would make broader use of inputs and learn in much the same way as humans do, by making mistakes until the correct outcome is achieved and then strengthened through reinforcement. This is what is known as ‘real’ or ‘strong’ AI.

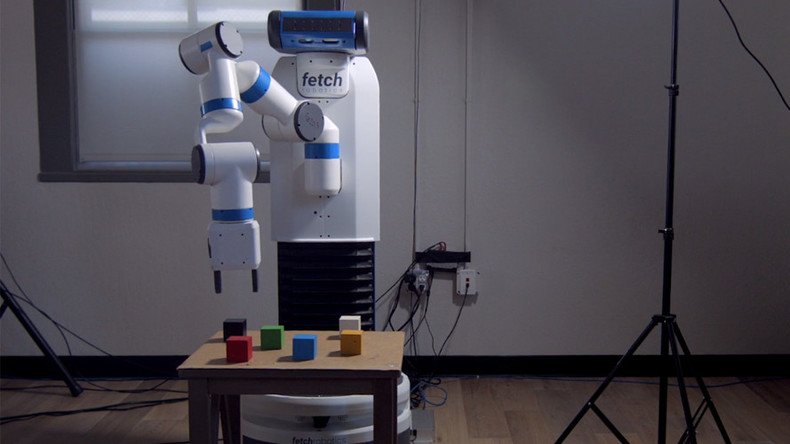

The team at OpenAI has trained a self-learning algorithm in Virtual Reality (VR) to carry out a task, although it’s nothing crazy just yet: stacking colored blocks into a series of towers.

"We've developed and deployed a new algorithm, one-shot imitation learning, allowing a human to communicate how to do a new task by performing it in VR," OpenAI wrote in a blog post on Tuesday.

The algorithm is powered by two neural networks, a vision network and an imitation network, both of which are loosely modeled on processes that take place in the human brain.

The vision network is trained on hundreds of thousands of simulated images that combine a wide variety of shapes, sizes, colors and textures, in addition to ambient lighting effects.

The vision system is never trained using real images, as this would be far too time- and cost-intensive for the research team. It also means that the vision system does not depend on replicating exact scenarios it has seen before but can instead respond to an immense variety of circumstances.
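The idea of training only on varied simulated images can be illustrated with a short sketch. This is a hypothetical illustration, not OpenAI's code: the function names, the parameter ranges and the list of textures are all assumptions made for the example. It only samples randomized scene descriptions, which a simulator would then render into images.

```python
import random

def random_scene(rng):
    """Sample one simulated scene description (all fields are hypothetical)."""
    return {
        # Random RGB color, each channel between 0 and 1.
        "block_color": tuple(rng.random() for _ in range(3)),
        # Block edge length in centimeters (assumed range).
        "block_size_cm": rng.uniform(3.0, 8.0),
        # Surface texture picked from an assumed set.
        "texture": rng.choice(["plain", "striped", "checkered", "noisy"]),
        # Ambient light level, from dim to bright.
        "light_intensity": rng.uniform(0.2, 1.0),
        # Block position on the table, in meters from the center.
        "block_position": (rng.uniform(-0.5, 0.5), rng.uniform(-0.5, 0.5)),
    }

def make_dataset(n, seed=0):
    """Build n randomized scenes; the seed makes the dataset reproducible."""
    rng = random.Random(seed)
    return [random_scene(rng) for _ in range(n)]

scenes = make_dataset(1000)
```

Because every scene differs in color, size, texture and lighting, a network trained on such data cannot simply memorize one setup, which is the property the researchers describe.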

The algorithm "observes a demonstration, processes it to infer the intent of the task, and then accomplishes the intent starting from another starting configuration," the team added in its blog.

The way in which the algorithm learns has been dubbed ‘one-shot imitation learning’: a human demonstrates a task once in VR, and the learning algorithm then replicates the task in the physical world from an arbitrary starting point.

While it's hardly a technological miracle just yet, it all has to start somewhere and these ‘baby steps’ are just the first in what will be a long and exhaustive process of educating AGIs to assist humans in the real world.

“Infants are born with the ability to imitate what other people do,” Josh Tobin, a member of OpenAI’s technical staff, said. “Imitation allows humans to learn new behaviors rapidly. We would like our robots to be able to learn this way, too.”

“With a single demonstration of a task, we can replicate it in a number of different initial conditions. Teaching the robot how to build a different block arrangement requires only a single additional demonstration.”

The research team also realized that, for the algorithm to be effective in real-world environments, it would need to cope with incomplete data and imperfect scenarios. To achieve this, they injected ‘noise’ into the ‘policy’ that governs the algorithm during training, so that it would be forced to adapt when things go wrong, which they invariably do.
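A toy sketch can show why noise helps. It is not OpenAI's implementation: it assumes a one-dimensional gripper, a hand-written `scripted_policy`, and Gaussian noise added to each executed move, all of which are hypothetical choices for illustration. The demonstrator gets knocked off course at every step, so the recorded examples include many corrections from off-target positions.

```python
import random

def scripted_policy(position, target, max_step=0.1):
    """Hypothetical expert: step toward the target, at most max_step per move."""
    error = target - position
    return max(-max_step, min(max_step, error))

def collect_demo(start, target, noise_std, steps=200, seed=0):
    """Run the expert with noisy execution and record (state, action) pairs."""
    rng = random.Random(seed)
    position = start
    samples = []
    for _ in range(steps):
        action = scripted_policy(position, target)
        # Record the state and the move the expert intended from that state.
        samples.append((position, action))
        # The move is executed imperfectly: Gaussian noise perturbs it.
        position += action + rng.gauss(0.0, noise_std)
    return position, samples

clean_end, clean_samples = collect_demo(0.0, 1.0, noise_std=0.0)
noisy_end, noisy_samples = collect_demo(0.0, 1.0, noise_std=0.02)
```

In the noise-free run the recorded states all lie on one ideal path, while the noisy run also records how to recover from small errors around the target.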

“Our robot has now learned to perform the task even though its movements have to be different than the ones in the demonstration,” explains Tobin.

The value of such adaptability cannot be overstated, given that training in VR allows researchers to create any number of potential environments in which future robots can develop multiple skillsets.

Examples could range from search and rescue operations in the aftermath of an earthquake, to conducting research in nuclear fallout zones such as Fukushima or even navigating the rocky terrain on Mars to carry out repairs on a stricken rover.